Designing an Ethical Algorithm - Self-Driving Cars

19 Apr 2019

Background

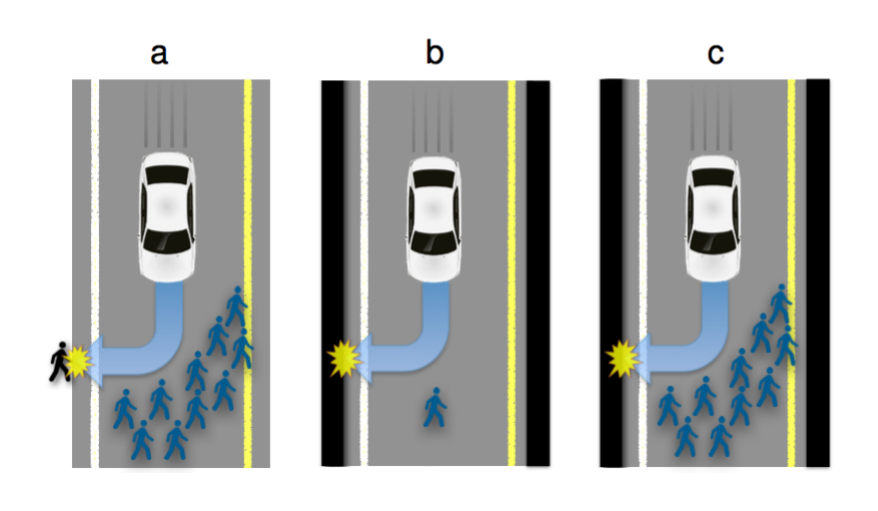

Autonomous car technology is approaching an ethical dilemma: eventually, cars will need to make decisions in which every option is imperfect. In the most extreme case, a car may need to decide who will live and who will die. For instance, a car might have to choose between running over two people in the road and crashing into a wall, killing its one occupant. It may seem obvious to choose one death over two; however, it is not always so simple. Such a policy could discourage consumers from buying autonomous cars, since few people would want to use a car that might sacrifice them. Because autonomous cars would theoretically be much safer than standard cars, lower adoption could in turn lead to more deaths overall.

Ethical Obligations in Design

The IEEE Computer Society has a code of ethics that helps engineers keep their designs within acceptable ethical limits. It is organized into eight principles, which can be viewed at https://www.computer.org/education/code-of-ethics. Personally, I would simply design the car to do its best to minimize overall deaths. One study found that people were more likely to encourage the use of these types of cars as long as they themselves were not the ones in the car. I feel that more research is needed on how much this would actually affect the decision to purchase a self-driving car over a standard one. My own sense is that there would not be a noticeable change in purchases, and thus a simple “kill least” algorithm would be fine. I am also of the opinion that making the algorithm too complex would introduce a multitude of potential biases. This philosophy is similar to Occam’s Razor, which prefers simpler explanations of phenomena.
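To make the “kill least” idea concrete, here is a minimal sketch in Python. It assumes that some upstream system can already estimate the number of deaths for each possible maneuver, which is the genuinely hard part and is not shown here. The names `Maneuver`, `expected_deaths`, and `choose_maneuver` are hypothetical and used only for illustration; this is not taken from any real autonomous-driving system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A hypothetical candidate action the car could take."""
    name: str
    # Assumption: supplied by some upstream prediction model (not shown).
    expected_deaths: float


def choose_maneuver(options: list[Maneuver]) -> Maneuver:
    """Return the option with the fewest expected deaths.

    Every person counts equally, occupant or pedestrian; no other
    attribute is considered. On a tie, min() keeps the first option listed.
    """
    if not options:
        raise ValueError("at least one maneuver is required")
    return min(options, key=lambda m: m.expected_deaths)


if __name__ == "__main__":
    # The two-pedestrians-versus-one-occupant dilemma from the Background section.
    options = [
        Maneuver("continue straight (hits two pedestrians)", 2),
        Maneuver("swerve into wall (kills one occupant)", 1),
    ]
    print(choose_maneuver(options).name)  # swerve into wall (kills one occupant)
```

Because the selection looks only at a count, there is no field through which attributes such as wealth could enter the decision, which is the kind of simplicity argued for above.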

Reasoning

My choice aligns most closely with principle 1 of this code of ethics, which states that engineers are to act consistently with the public interest. I believe my design would result in the fewest overall deaths, which serves that interest. There is some conflict with principle 2, which requires engineers to act in the best interests of their client and employer while remaining consistent with the public interest. It could be argued that my algorithm could lead to decreased sales for the employer, or that it gives too little weight to the consumer. I would counter this by focusing on the latter half of the principle, which requires remaining consistent with the public interest.

A more complex system may introduce biases, such as a decision to prefer hitting a poor person over a wealthy one. There could be a rationale for such a design, but it may also be the result of bias. For example, the software engineer, or whoever makes the design decision, may be wealthy themselves. This raises issues under principle 4 (judgement), which requires engineers to free their judgement from all biases.